Opus 4.6 Just Dropped 2 Days Ago. Here's What Founders Need to Know

On Feb 5, 2026, Anthropic dropped Opus 4.6, their most advanced AI model. Every tech publication immediately ran the same story: benchmark scores, developer features, coding performance.

Cool. That’s not what this post is about.

I run three businesses: a travel blog with content across 73 countries, an AI education brand, and a leadership retreat company for founders and executives. I use Claude every day across all of them for content creation, SEO optimization, business strategy, and automation. It’s not a novelty for me. It’s infrastructure, and when it improves, my week gets lighter.

So when a major model upgrade drops, I don’t care about benchmark charts. I care about one thing: what changes for my business on Monday morning? My bar is simple. Does it reduce handoffs, reduce rework, and cut the time I spend re-explaining context?

The answer this time? A lot.

This weekend I’m stress-testing it with real founder chaos: messy notes, conflicting constraints, and the stuff I usually avoid uploading. And based on what Opus 4.6 introduced, I fully expect it to change how I work.

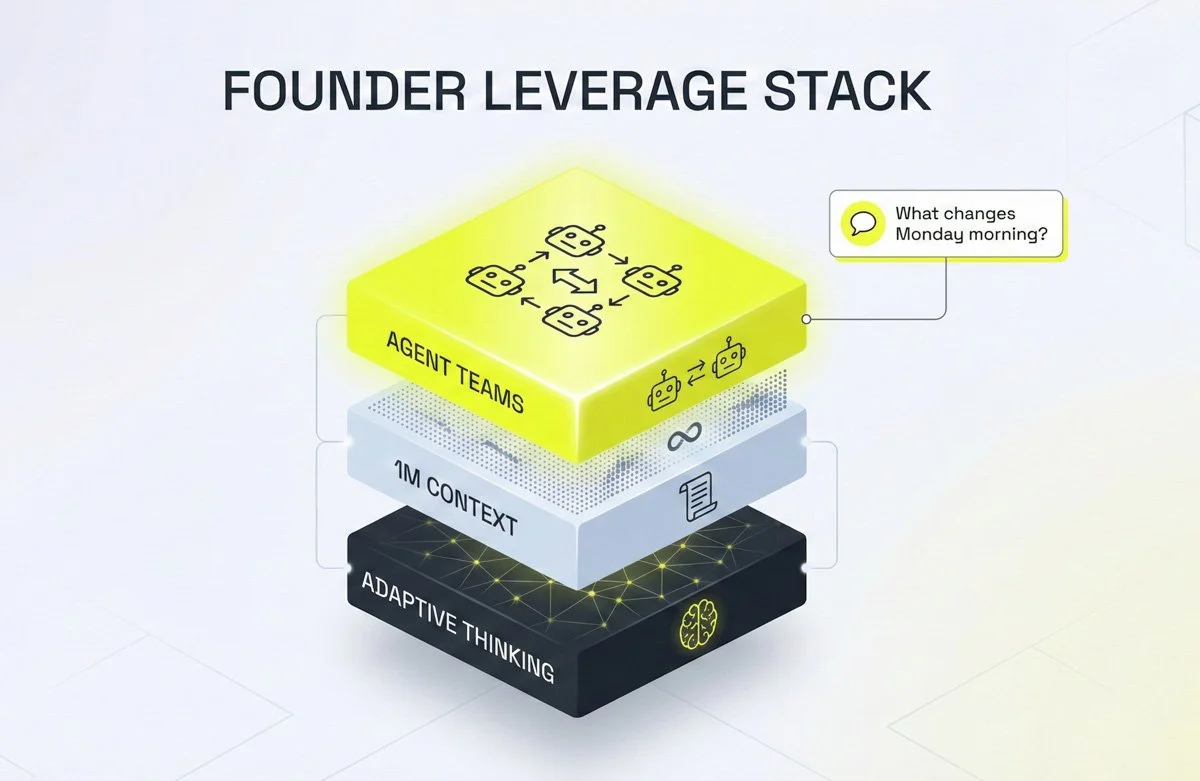

Opus 4.6 introduced three things that actually matter if you’re running a company, not writing code: agent teams (multiple AI workers operating in parallel on the same task), a 1M token context window beta (it can hold your entire business in one conversation), and adaptive thinking (it decides how hard to think based on what you’re asking).

None of the coverage I’ve seen explains what this means for founders. So I’m going to.

If you want the official details, I linked the launch post and a couple of third-party writeups at the end. This post is the founder translation.

The 3 upgrades that change founder workflows, not just benchmarks.

The 30-Second Version of Opus 4.6

If you're short on time, here's everything that matters in four bullets. Screenshot this and come back later.

Agent teams. You can now spin up multiple AI agents that work on the same project simultaneously. One researches, one writes, one optimizes…and they coordinate with each other autonomously. Think of it like hiring a team that works overnight and doesn't need a Slack channel. The first shift for me is psychological. I stop writing prompts and start assigning roles.

1M token context window. Previous versions of Opus maxed out at 200K tokens. That's roughly 150,000 words. Opus 4.6 can hold 750,000 words in a single session. That's your Q1 plan, your financials, your customer research, your product roadmap, AND the conversation about all of it. No more "sorry, I lost the thread" halfway through a complex project.

Adaptive thinking. Claude now reads the room. Ask it a quick factual question? Fast, efficient answer. Ask it to analyze a messy competitive landscape? It thinks deeper, takes more time, gives you something worth reading. You no longer have to choose between speed and depth. The model makes that call for you.

Knowledge work, not just code. This is the part nobody's talking about. Opus 4.6 outperforms GPT-5.2 by roughly 144 Elo points on real-world business tasks: finance, legal, research, and analysis. That's not a marginal improvement. That's a different tier. And Anthropic's own Head of Product admitted that non-developers are now a massive segment of Claude's user base. This model was built with us in mind.

That last point is worth sitting with. For the first time, the most powerful AI model on the market wasn't designed primarily for engineers. It was designed for people who run things. As someone who is more of a big-picture ideas guy, I love this!

Now let me break down each of these and show you what they actually look like in practice.

Agent Teams: Your AI Workforce

This is the feature that made me stop what I was doing on Thursday.

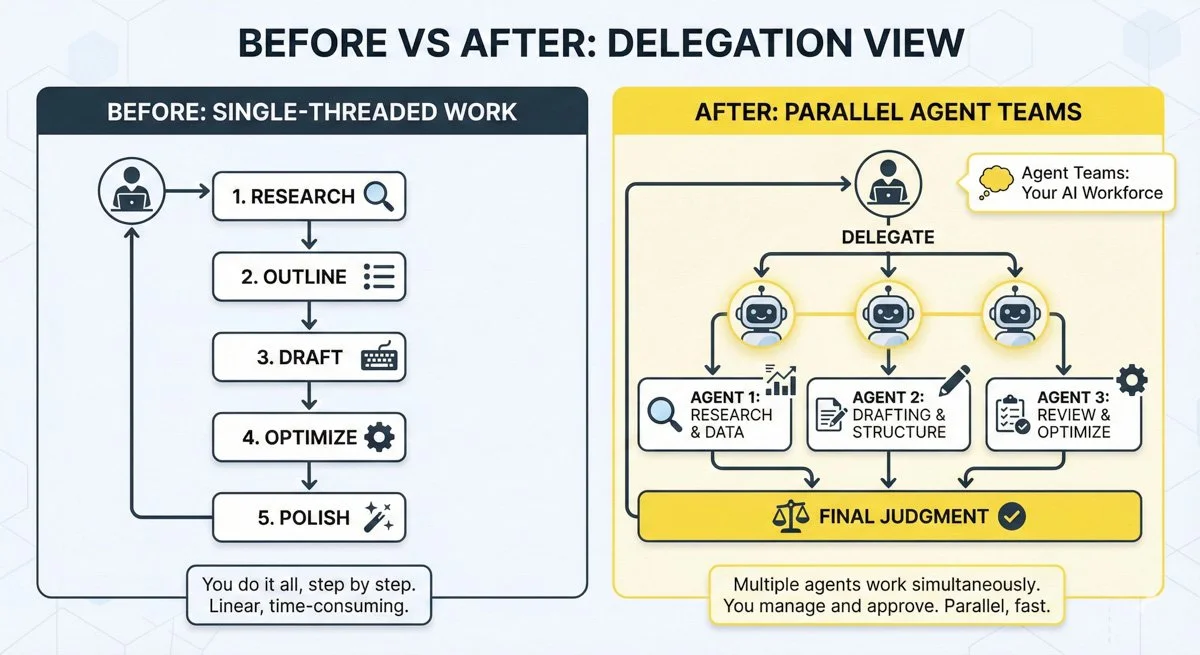

Agent teams is exactly what it sounds like. Instead of one AI working through your task step by step, you can now spin up multiple Claude agents that each take ownership of a piece and work simultaneously. One agent acts as the team lead. It breaks the project into parts, assigns them, and coordinates the output.

Let that sink in. You're not prompting a chatbot. You're managing a team. I’m already rewriting my workflows as if I have a junior analyst, a researcher, and an editor on call. That’s what this enables.

Here's what that looks like in practice for someone running a business…not writing code:

Say you're preparing for a major pitch. Normally you'd spend a full day pulling together the research, drafting the deck narrative, checking the financials, and writing follow-up emails. With agent teams, you describe the project once. One agent pulls market data and competitive intel. Another drafts your talking points. A third reviews your financials for inconsistencies. They run in parallel. You come back to a coordinated first draft of everything.

Or take content. I produce SEO-optimized travel content across dozens of destinations. With agent teams, one agent can research keyword gaps, another can draft the post structure, and a third can generate meta descriptions and social copy…all from the same brief, at the same time.
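If it helps to see the shape of that, here is a minimal Python sketch of the "one brief, several roles, all at once" pattern from the content example above. It is only an illustration of the idea: the role names come from that example, the function names are made up, and the worker is a stand-in that never calls Claude. It is not how Anthropic implements agent teams.

```python
import asyncio

# Roles borrowed from the content example above. Everything here is
# hypothetical: the worker is a placeholder and makes no real model call.
ROLES = ["keyword research", "post structure", "meta descriptions and social copy"]


async def run_worker(role: str, brief: str) -> str:
    """Stand-in for one agent doing its share of the work."""
    await asyncio.sleep(0)  # placeholder where a real model call would go
    return f"[{role}] draft for: {brief}"


async def run_team(brief: str) -> dict[str, str]:
    """Lead step: give every role the same brief and run them in parallel."""
    results = await asyncio.gather(*(run_worker(role, brief) for role in ROLES))
    # gather() returns results in the order the tasks were passed in,
    # so they line up with ROLES.
    return dict(zip(ROLES, results))


if __name__ == "__main__":
    for output in asyncio.run(run_team("Lisbon in winter")).values():
        print(output)
```

The point of the sketch is the structure: you write the brief once, and the splitting and collecting happens around it.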

The real-world results are already wild. Rakuten turned Opus 4.6 loose on their engineering organization…roughly 50 people across six code repositories. In a single day, it autonomously closed 13 open issues and routed 12 others to the right team members. It made both product decisions and organizational decisions. And it knew when to stop and escalate to a human.

Read that again. It didn't just do the work. It figured out what work needed doing, who should do it, and what was above its pay grade.

Anthropic's own researchers tested agent teams by having them build an entire C compiler from scratch… multiple agents working in parallel, with minimal human supervision. They mostly walked away and came back to a working compiler. That's not a demo. That's a proof of concept for autonomous work.

The honest caveat…

Agent teams are in research preview right now. They work best on tasks that split cleanly into independent pieces…research, reviews, analysis, content creation. If multiple agents need to edit the same document or file simultaneously, you'll run into conflicts. For those tasks, single-agent is still the move.

But here's my take on why this matters beyond the feature itself: agent teams change the mental model. You stop thinking "what can I ask this AI?" and start thinking "what can I delegate to this team?" That's a fundamentally different relationship with the tool. And the founders who internalize that shift first are going to operate at a completely different speed.

The shift: from doing work to managing a workflow

The 1M Context Window, Explained for Humans

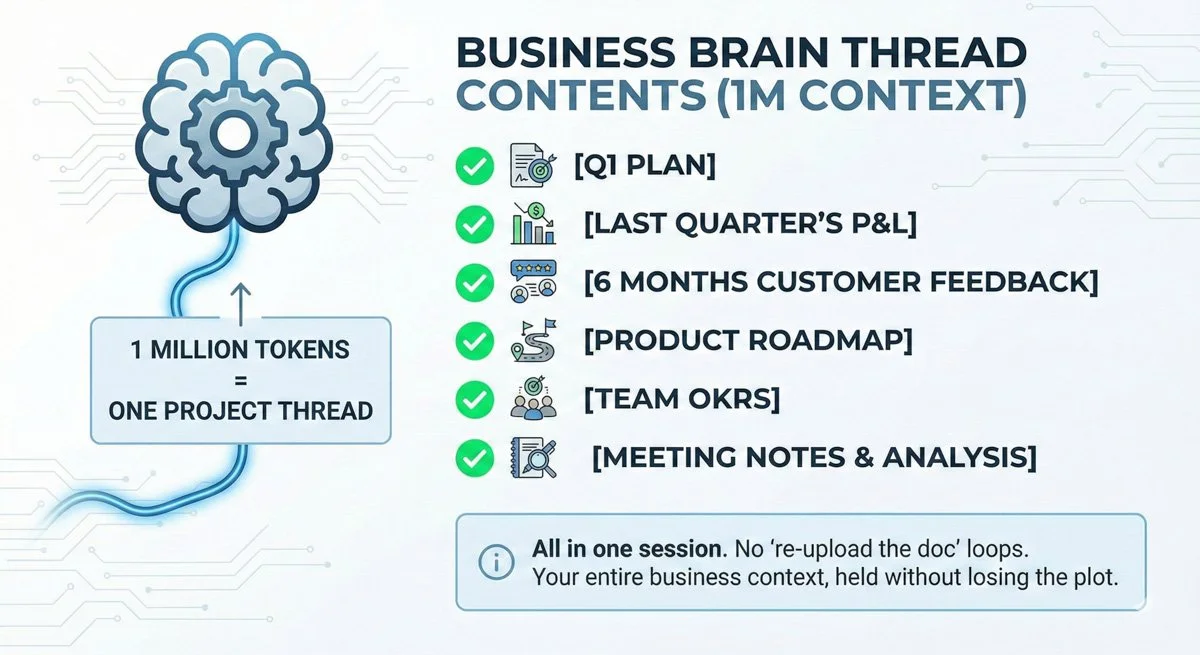

If you've ever been deep into a complex conversation with Claude — feeding it your strategy doc, your financials, your customer research — and had it suddenly forget what you told it twenty minutes ago, you know the pain. I hit this constantly across all three businesses. One long strategy thread, and suddenly the most important constraint vanishes.

That was a context window problem. The model could only hold so much information before older details started falling off the edge. Previous Opus models topped out at 200K tokens. Useful, but limiting for anyone doing serious business work. I’ve had the same issue with other LLMs: ChatGPT, Gemini, Grok, and the rest.

Opus 4.6 takes that to 1 million tokens. For me, that means fewer ‘re-upload the doc’ loops. It means one thread can actually become the project.

Let me make that tangible. One million tokens is roughly 750,000 words. That's about ten full-length books. Or, more relevantly for you: your entire business plan, last quarter's P&L, six months of customer feedback, your product roadmap, your team's OKRs, and the conversation analyzing all of it. In one session. Without Claude losing the plot.
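Here is the back-of-napkin math behind those numbers, as a small Python sketch. The 0.75 words-per-token ratio is just what the figures in this post imply (1M tokens to 750,000 words), and the 75,000-word book is my assumption for "full-length." Real token counts vary with the text, so treat this as a rough estimate.

```python
# Rule of thumb implied by this post: 1M tokens is roughly 750,000 words.
WORDS_PER_TOKEN = 0.75
# Assumption: a "full-length book" is about 75,000 words.
WORDS_PER_BOOK = 75_000


def tokens_to_words(tokens: int) -> int:
    """Rough word capacity for a given token budget."""
    return round(tokens * WORDS_PER_TOKEN)


def tokens_to_books(tokens: int) -> float:
    """Same capacity expressed in assumed full-length books."""
    return tokens_to_words(tokens) / WORDS_PER_BOOK


if __name__ == "__main__":
    for label, tokens in [("200K window", 200_000), ("1M window", 1_000_000)]:
        print(label, tokens_to_words(tokens), "words,", tokens_to_books(tokens), "books")
```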

Anthropic's launch post compares models on a long-context retrieval test, which measures how well a model recalls specific details buried deep in a very long conversation. Sonnet 4.5, the comparison model, scored 18.5%. Opus 4.6 scores 76%. That's not an incremental improvement. That's the difference between an assistant who skims your docs and one who actually reads them. And yes, I care way more about this than raw IQ. Smart without memory is just confident chaos.

The practical win: one thread becomes the project.

Thomson Reuters put it well. They said Opus 4.6 handled "much larger bodies of information with a level of consistency that strengthens how we design and deploy complex research workflows." Translation: it doesn't drift. It doesn't forget your constraints. It holds the whole picture.

Here's why this matters more than people realize. The biggest limitation of AI for business has never been intelligence. It's been memory. You could have the smartest model in the world, but if it forgets your pricing strategy halfway through building your pitch deck, it's useless for real work.

That ceiling just got five times higher.

The fine print: Standard pricing ($5/$25 per million tokens on the API) applies up to 200K tokens. Beyond that, it bumps to $10/$37.50. If you're on a Pro or Max subscription, you're covered within your plan limits. For most founder use cases — strategy sessions, document analysis, content projects — you'll stay comfortably under 200K. But the point is that when you need to go deep, really deep, the model can go with you.
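If you want to sanity-check an API bill, here is a rough Python sketch using the rates from the fine print above. One assumption to flag loudly: I'm treating the higher rate as applying to the whole request once the prompt goes past 200K input tokens. Check Anthropic's pricing page before you budget off this.

```python
LONG_CONTEXT_THRESHOLD = 200_000  # input tokens, per the fine print above

# USD per million tokens as (input, output), taken from this post.
STANDARD = (5.00, 25.00)
PREMIUM = (10.00, 37.50)


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one API request.

    Assumption: when the prompt exceeds the threshold, the premium rates
    apply to the entire request, not only to the tokens past 200K.
    """
    in_rate, out_rate = PREMIUM if input_tokens > LONG_CONTEXT_THRESHOLD else STANDARD
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


if __name__ == "__main__":
    print(request_cost(150_000, 4_000))  # a big strategy doc, under the threshold
    print(request_cost(600_000, 4_000))  # a very large upload, over the threshold
```

Under those assumptions the first request lands at about $0.85 and the second at about $6.15, which is why staying under 200K matters for everyday work.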

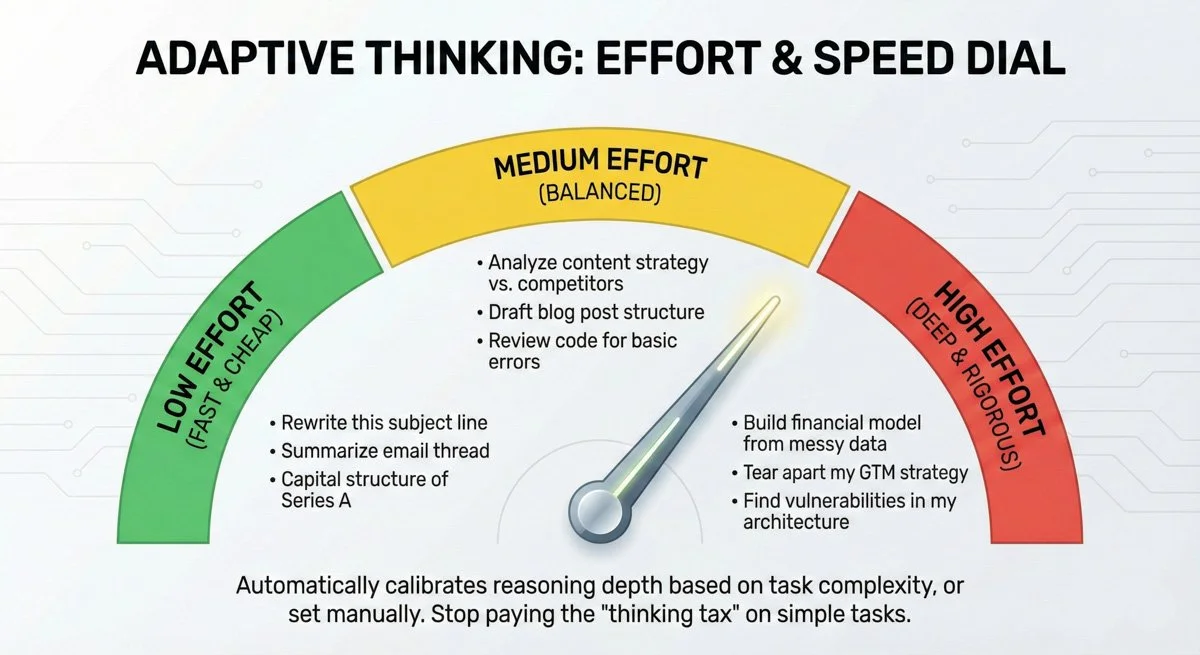

Adaptive Thinking: The Feature Nobody's Talking About

This one isn't flashy. No cool name. No demo video. But it might be the feature that saves you the most time and money day to day.

Here's the problem it solves. Before Opus 4.6, Claude used the same depth of reasoning for everything. Ask it what time it is in London? Deep thinking. Ask it to tear apart your go-to-market strategy? Same depth of thinking. That's wildly inefficient. You're waiting 30 seconds for an answer that should take three, or you're getting a surface-level response when you needed something rigorous. I feel this every day in micro-moments. The tiny tasks add up, and latency quietly taxes your attention.

Adaptive thinking fixes this. Opus 4.6 now reads the complexity of your request and calibrates how hard it thinks. Simple question, fast answer. Complex analysis, deep reasoning. Automatically.

It's like the difference between a new hire who spends four hours formatting a simple email and a seasoned operator who knows when to move fast and when to slow down and get it right. Opus 4.6 is the seasoned operator. That’s the role I actually want AI to play. Not a genius intern, a calm operator who knows what matters.

For founders on a Pro or Max subscription, this means noticeably faster responses on everyday tasks. You'll feel it immediately. The quick stuff — "rewrite this subject line," "summarize this email thread," "what's the capital structure of a Series A" — comes back almost instantly. The heavy stuff like "analyze my content strategy against these three competitors and tell me where I'm vulnerable" gets the time and depth it deserves.

If you're using the API to run automations, the impact is even more direct. Anthropic's own data shows that at medium effort, Opus 4.6 matches the previous model's performance while using 76% fewer output tokens. Fewer tokens means lower cost. Same quality, fraction of the price. If you're running any kind of AI workflow at scale, that math changes everything.
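A quick caution on that math, sketched in Python. It takes the 76% figure at face value and uses the standard API rates quoted earlier in this post ($5 input, $25 output per million tokens). The token counts are made-up examples. The takeaway: the cut applies to output tokens only, so your real savings depend on how output-heavy the job is.

```python
INPUT_RATE = 5.00        # USD per million input tokens (standard rate quoted earlier)
OUTPUT_RATE = 25.00      # USD per million output tokens
OUTPUT_REDUCTION = 0.76  # the post's figure, taken at face value


def job_cost(input_tokens: float, output_tokens: float) -> float:
    """USD cost of one job at the standard rates."""
    return (input_tokens * INPUT_RATE + output_tokens * OUTPUT_RATE) / 1_000_000


def savings_fraction(input_tokens: float, output_tokens: float) -> float:
    """Share of the bill saved when only the output tokens shrink."""
    before = job_cost(input_tokens, output_tokens)
    after = job_cost(input_tokens, output_tokens * (1 - OUTPUT_REDUCTION))
    return (before - after) / before


if __name__ == "__main__":
    # Hypothetical jobs: one input-heavy (long doc, short answer), one output-heavy.
    print(f"input-heavy job saves about {savings_fraction(20_000, 2_000):.0%}")
    print(f"output-heavy job saves about {savings_fraction(1_000, 10_000):.0%}")
```

With these example numbers, the input-heavy job saves roughly a quarter of its cost and the output-heavy job saves roughly three quarters.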

You can also set effort levels manually — low, medium, high — depending on the task. Running a quick batch of meta descriptions for blog posts? Low effort, fast and cheap. Building a financial model from messy data? Crank it to high. You're in control.

The bottom line: you no longer pay a "thinking tax" on simple tasks just because the model is powerful. It finally knows when to sprint and when to go deep.

Sprint for the quick stuff. Slow down for strategy.

Beyond Code: The Real Story Nobody's Writing

Here's a quote that should be getting way more attention than it is.

Scott White, Anthropic's Head of Product, said this about Claude Code: "We noticed a lot of people who are not professional software developers using Claude Code simply because it was a really amazing engine to do tasks."

That line describes me. I’m not trying to become an engineer, I’m trying to ship outcomes faster.

Read between the lines. The most powerful AI tool on the market was built for developers…and the business people showed up anyway. They fought through terminal interfaces and command-line syntax because the underlying capability was that good.

Anthropic noticed. And Opus 4.6 is their response.

This model isn't just smarter at code. It's dramatically better at the work founders, operators, and knowledge workers actually do every day. The benchmarks back this up across the board:

Box tested it on multi-source analysis across legal, financial, and technical content. They saw a 10-point performance lift…hitting 68% accuracy versus a 58% baseline. In technical domains, it scored near-perfect.

Harvey, an AI legal platform used by major law firms, ran it through BigLaw Bench. Opus 4.6 hit 90.2% — the highest score of any Claude model. 40% of its answers were perfect. 84% scored above 0.8.

And it doesn't just analyze. It creates. Opus 4.6 produces documents, spreadsheets, and presentations that look like a senior analyst made them. Anthropic says the model "understands the conventions and norms of professional domains." That's corporate speak, but it's accurate. The outputs actually look professional. Not AI-generated-professional. Actually professional.

Then there's the office tool integration, which is bigger than people realize.

Claude in Excel got a major upgrade. It handles longer, more complex tasks now. It can take messy, unstructured data and infer the right structure without you telling it how. If you've ever spent two hours cleaning a CSV before you could even start analyzing it, that pain is gone.

Claude in PowerPoint is brand new. Claude now lives as a side panel inside PowerPoint. You build the deck with AI assistance in real time — no more creating a presentation in Claude, exporting it, and then editing it separately. It's native. This matters because decks are where strategy goes to die. If the tool lives inside the deck, the strategy survives contact with reality.

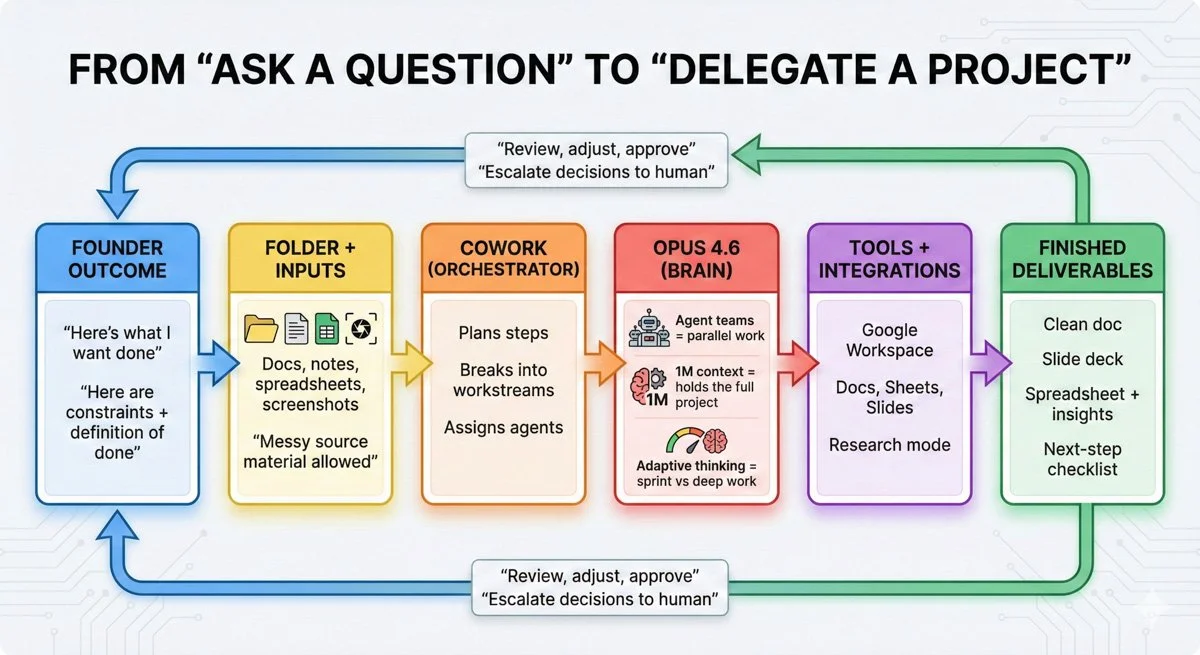

Now stack all of this on top of Cowork.

If you missed it, Anthropic launched Cowork on January 12th. It's built on the same technology as Claude Code, but designed for non-developers. You point it at a folder on your Mac, describe what you want done, and walk away. It plans, executes, and delivers finished work. Now it's running on Opus 4.6.

Cowork is the interface. Opus 4.6 is the brain. Together, it's the most powerful non-developer AI setup that exists right now. You describe the outcome. It does the work. That's literally how it operates. This is the closest I’ve seen to ‘delegate a project’ instead of ‘ask a question.’ That’s a different category of product.

The shift here is significant. For the past two years, the AI conversation has been dominated by developers. The tools were built for them. The content was written for them. The use cases were theirs.

That era is ending. And founders who recognize it early will have a real advantage.

Cowork runs the workflow, Opus 4.6 does the thinking, and you stay in the judgment seat, delegating outcomes instead of asking questions.

What This Actually Costs

I'm not going to bury the pricing at the bottom of a FAQ. If you're running a business, cost is context. Here's the full picture.

Free plan: No access to Opus 4.6. You get Sonnet, which is solid for lighter tasks, but you're not getting the model we've been talking about in this post. If you're serious about using AI as a business tool, free isn't going to cut it.

Pro — $20/month: This is where it gets interesting. You get Opus 4.6, Claude Code, Cowork, Google Workspace integration, Research mode, and memory across conversations. For twenty dollars. That's less than most people spend on Spotify and Netflix combined. If you're using AI for more than casual questions, this is the obvious starting point.

Max — $100/month: Five times the usage of Pro. If you're in Claude daily for hours at a time — running content workflows, building automations, doing deep strategy sessions — you'll hit Pro limits eventually. Max gives you the headroom to work without watching the meter. You also get access to agent teams and the 1M context window at higher usage levels.

Max — $200/month: Twenty times the usage of Pro. This is for founders who've made Claude a core part of their operating stack. If Claude is replacing tasks you'd otherwise pay a contractor or VA to do, this tier pays for itself before lunch on day one.

Here's the ROI math I run in my own head.

I use Claude across three businesses every day. Content strategy, SEO optimization, social media drafts, retreat planning, business coaching prep, automation building. Conservatively, it saves me 10 to 15 hours a week. At even a modest consulting rate, that's $1,000 to $2,000 in recaptured time per week. For $20 a month.
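Here is that math written out as a small Python sketch so you can plug in your own numbers. The hours and dollar ranges are the ones above. The hourly rate is whatever your time is worth, so the function arguments are placeholders, and the weeks-per-month figure is a simple 52/12 average.

```python
WEEKS_PER_MONTH = 52 / 12   # simple average, about 4.33
SUBSCRIPTION_PER_MONTH = 20  # Pro plan price from this post, in USD


def implied_hourly_rate(weekly_value: float, hours_per_week: float) -> float:
    """Hourly rate that a weekly dollar figure implies for the hours saved."""
    return weekly_value / hours_per_week


def monthly_value(hours_per_week: float, hourly_rate: float) -> float:
    """Dollar value of recaptured time over an average month."""
    return hours_per_week * hourly_rate * WEEKS_PER_MONTH


if __name__ == "__main__":
    # The ranges quoted above: 10 to 15 hours, $1,000 to $2,000 per week.
    print(round(implied_hourly_rate(1_000, 10)))  # low end
    print(round(implied_hourly_rate(2_000, 15)))  # high end
    value = monthly_value(10, 100)
    print(round(value), "per month, or", round(value / SUBSCRIPTION_PER_MONTH), "times the subscription")
```

Run with the low-end numbers, the quoted range works out to a rate of about $100 to $133 an hour. Swap in your own rate and hours to see where you land.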

Compare that to hiring. A part-time VA costs $500 to $2,000 a month depending on skill level. A junior analyst or content writer costs more. Claude doesn't need onboarding, doesn't take PTO, and just got significantly smarter on Thursday.

I'm not saying it replaces people. I'm saying it changes what you need people for. The repetitive, structured, research-heavy work? Claude handles it. Your team focuses on judgment, relationships, and the things that actually require a human in the room. Now, that's leverage.

Where to Start

I'm not going to leave you inspired but stuck. Here's exactly what to do this week depending on where you are right now.

If you already have a Claude subscription:

Switch to Opus 4.6. In Claude chat, select it from the model dropdown. In Claude Code, type /model and choose it. Takes five seconds.

Then try this. Take your most important business document…your Q1 plan, your investor update, your content strategy, whatever you're actively working on…and upload it. Ask Claude to find the gaps. The inconsistencies. The assumptions you're making that you haven't pressure-tested. Don't ask it to summarize. Ask it to challenge.

I’m looking for the uncomfortable stuff: missing assumptions, weak logic, and the parts I’m emotionally attached to. That’s where the money is.

You'll feel the difference from previous models in the first response. The depth is noticeably different. It catches things you didn't ask it to look for.

If you're brand new to Claude:

Start with Pro. $20 a month. You get Opus 4.6, Claude Code, and Cowork…which is everything we've talked about in this post.

Download the Claude desktop app on macOS. Open Cowork from the sidebar. Point it at a folder — your downloads, a project folder, a pile of scattered notes — and give it a task. "Organize these by topic and create a summary document." Then watch it work.

That first time you walk away from your computer, come back ten minutes later, and find finished work waiting for you? That's the moment it clicks. You stop thinking of AI as a tool you use and start thinking of it as a team member you manage.

The one thing I'd tell every founder reading this:

Pick one repeatable task from your week. Just one. Something you do every week that follows a predictable pattern… writing a status update, prepping for a meeting, creating social content from a blog post, organizing client feedback, cleaning up data. Give it to Claude. See what happens.

Don't try to overhaul your entire workflow on day one. That's how people burn out on new tools. Just find the one task where AI leverage is obvious and start there. Expand from that win.

The founders I work with who actually stick with AI tools all did it the same way. They didn't go wide. They went deep on one thing, got a result that surprised them, and then couldn't stop finding more.

The Bigger Picture of Opus 4.6

Everyone's debating which AI model is best. Opus vs GPT vs Gemini. Benchmark wars. Leaderboard drama.

That's the wrong conversation.

The right question isn't which model is smartest. It's how fast you can encode your business into an AI workflow. Your processes. Your knowledge. Your decision-making patterns. The stuff that currently lives in your head and nowhere else.

Opus 4.6 didn't just get smarter. It got more autonomous. It can hold your entire business context without forgetting. It can spin up a team of agents and coordinate them without you standing over its shoulder. It knows when to think hard and when to move fast.

That's a shift in what's possible for a founder operating alone or with a small team.

The gap between the founders who figure this out in 2026 and the ones who wait is going to be massive. Not because the technology is complicated. It's not. But because the mental model — treating AI as a team you manage, not a tool you query — takes time to build. And the people building it now will be operating at a completely different speed by Q4.

This isn't about technology. It's about leverage.

And it's available right now for $20 a month.

Primary sources (best place to start)

Anthropic: Introducing Claude Opus 4.6

Anthropic: Claude Opus 4.6 System Card

Claude Code Docs: Agent teams (how it works, how sessions coordinate)

Anthropic Engineering: Building a C compiler with a team of parallel Claudes

Claude Help Center: Using Claude in PowerPoint

Claude Help Center: Using Claude in Excel

Anthropic: Claude Opus pricing and API details (includes Opus 4.6)

Trusted coverage (good context, founder-relevant angles)

The Verge: Anthropic debuts new model with hopes to corner the market beyond coding

ITPro: Anthropic reveals Claude Opus 4.6, an enterprise-focused model with 1 million token context window

Reuters: Goldman Sachs teams up with Anthropic to automate banking tasks with AI agents, CNBC reports

Reuters: Anthropic releases AI upgrade as market punishes software stocks